Remote builds with AWS Spot Instances

Tags: selfhosted

Occasionally I contribute to open-source projects. Some of these projects are really big and compiling them takes a long time. This is usually not a problem in industry: either you’ll get an expensive desktop machine or remote build access to a fleet of expensive “build bot” machines.

Unfortunately I don’t have either of those – my laptop is nine years old and was nowhere near state-of-the-art when I bought it. Compiling a large open-source project like Node.js from scratch takes hours.

I used to get around this with a beefy 32-core Amazon EC2 instance. I turned it on only when I was using it, and a crontab entry shut the instance off automatically every night in case I forgot. There is a monthly charge for the disk storage, but it’s comparatively cheap. Even with careful stopping and starting of the instance, though, it ended up being more expensive than I would like. I spend a lot of time reading and writing code rather than compiling it, but regardless of whether the CPUs are completely idle or spinning at 100%, I’m paying Amazon multiple dollars per hour.

In addition to on-demand instances, AWS provides “spot instances”, which can be up to 90% cheaper. In exchange, you have to deal with “interruptions”, where AWS can decide to terminate your instance when it wants the capacity back.

I decided to create a “remote runner” tool. The idea is that I would run `remote_run make test`, and it would:

- Start up a spot instance.

- Send it the `make test` command and show me the results locally.

- Have the spot machine linger around for a while (in case I have a quick follow-up command) before shutting down.
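The "send it the command" step can be pictured as turning the local argument list into a single remote shell string. The sketch below is an illustration only, not the script shown later: `build_remote_command` and its `workdir` parameter are hypothetical names, and it assumes Python 3.8+ for `shlex.join`, which quotes each argument so that spaces survive the trip through the remote shell.

```python
import shlex


def build_remote_command(argv, workdir):
    """Turn a local argv list into one shell string to run on the remote.

    `workdir` is a hypothetical parameter: the directory on the build
    machine where the command should run.
    """
    if not argv:
        raise ValueError("no command given")
    # shlex.join quotes each argument, so spaces and quotes are preserved.
    return f"cd {shlex.quote(workdir)} && {shlex.join(argv)}"


if __name__ == "__main__":
    print(build_remote_command(["make", "test"], "/b/v8/v8"))
```

Joining arguments with a plain space is simpler, but it silently changes the meaning of arguments that contain spaces or quotes.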

Boring nitty gritty details

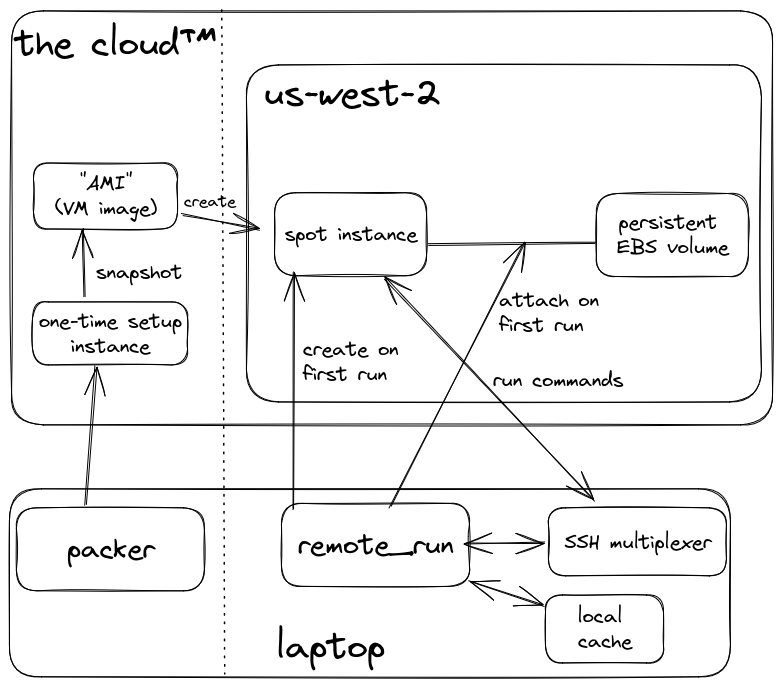

This ended up being much trickier than I imagined, mostly because I had a simplistic model of how AWS worked. Here’s what I ended up building in practice:

First, there are various build dependencies which need to be on the root disk in order to actually do the build. We could install them whenever we run a command, but that would add significantly to the start time. Instead we build an “AMI”, which is Amazon-speak for a virtual machine image. I used HashiCorp’s Packer to do this. This is a “one-time” setup, although you’ll have to do it “one-more-time” whenever you add new build dependencies.

Next, we need to store the build artifacts and repositories somewhere. This was easy for on-demand instances, because there was a single instance and it could just have an EBS volume attached. For spot instances this ended up being tricky: you need to manually attach the EBS volume and mount it on the machine when it starts.
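One wrinkle with manual attachment is that the device name requested from the API is not always the name the kernel exposes: a volume attached as /dev/sdf can show up as /dev/nvme1n1 on NVMe-based instance types, or as /dev/xvdf on some older ones. Here is a minimal sketch of picking whichever path exists, assuming it runs on the instance itself. `first_existing_device` and its `exists` parameter are hypothetical names used for illustration.

```python
import os


def first_existing_device(candidates, exists=os.path.exists):
    """Return the first candidate device path that exists, else None.

    `exists` is injectable so the logic can be exercised without real
    block devices.
    """
    for path in candidates:
        if exists(path):
            return path
    return None


# Candidate names for a volume that was attached as /dev/sdf.
CANDIDATES = ["/dev/nvme1n1", "/dev/sdf", "/dev/xvdf"]

if __name__ == "__main__":
    device = first_existing_device(CANDIDATES)
    print(device or "volume not visible yet")
```

A `None` result usually just means the attachment has not finished yet, so a caller would retry after a short sleep.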

At this point I was able to start actually running build commands. I added a git push over SSH to sync my local code to the remote, and got my first build passing :)

The runtime for a small command like `remote_run echo 1` was unacceptably slow even when the spot instance was already warm: 2-3 seconds. One of those seconds went to looking up the spot instance’s IP, which required AWS API calls that were not fast, so I built a “local cache” which stores the last known IP. Establishing a new SSH connection for each command took an additional second; OpenSSH’s multiplexing feature brought that down to ~60ms.
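Both speed-ups can be sketched in a few lines. This is a simplified illustration rather than the script below: `multiplexed_ssh_args`, `save_last_ip`, `load_last_ip`, and the cache file layout are hypothetical names, and the IP address is a documentation placeholder. The `ControlMaster`, `ControlPath`, and `ControlPersist` options are standard OpenSSH settings.

```python
import json
import os
import tempfile


def multiplexed_ssh_args(key_file, control_dir="/tmp"):
    """Build an ssh argument list that reuses one connection per host."""
    return [
        "ssh",
        "-i", key_file,
        # Open a master connection if none exists, otherwise reuse it.
        "-o", "ControlMaster=auto",
        # %C expands to a hash of the connection parameters.
        "-o", f"ControlPath={control_dir}/%C",
        # Keep the master alive for 10 minutes after the last session.
        "-o", "ControlPersist=10m",
    ]


def save_last_ip(cache_file, public_ip):
    """Remember the last known IP so warm runs can skip the AWS API."""
    os.makedirs(os.path.dirname(cache_file), exist_ok=True)
    with open(cache_file, "w") as f:
        json.dump({"public_ip": public_ip}, f)


def load_last_ip(cache_file):
    """Return the cached IP, or None if nothing usable is cached."""
    try:
        with open(cache_file) as f:
            return json.load(f).get("public_ip")
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        cache_file = os.path.join(d, "build-bot", "build_bot.json")
        save_last_ip(cache_file, "203.0.113.10")
        print(load_last_ip(cache_file))
        print(" ".join(multiplexed_ssh_args("~/.ssh/build-bot.pem")))
```

A cached IP can be stale, so the caller still has to check that the machine answers before trusting it.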

Then there were a bunch of annoying edge cases, like handling running a command when a spot instance was shutting down or starting up, or trying to make the remote runner robust even when I pressed Ctrl-C halfway through the pipeline.
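For the Ctrl-C case, one general-purpose pattern is to treat the interrupt as a normal way for the command to end and to put any must-run bookkeeping in a `finally` block. This is a sketch of that pattern only, with hypothetical names (`run_then_always`, `action`, `always`).

```python
import time


def run_then_always(action, always):
    """Run `action`; run `always` afterwards even if Ctrl-C arrives.

    Returns True if `action` finished, False if it was interrupted.
    """
    try:
        action()
        return True
    except KeyboardInterrupt:
        # Ctrl-C during the command: swallow it so bookkeeping still runs.
        return False
    finally:
        always()


if __name__ == "__main__":
    finished = run_then_always(
        lambda: time.sleep(0.1),       # stand-in for the remote command
        lambda: print("saving cache"),  # stand-in for the bookkeeping
    )
    print("finished" if finished else "interrupted")
```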

Annoyingly, while testing my code I started getting random errors. AWS informed me that they suspected that my account had been compromised. I suspect the average cryptomining workload looks pretty similar to this, although theirs is perhaps more economically productive.

Conclusion

The end result of all this toil:

- I can do builds really fast! My most complicated projects can do a full compile in ~minutes.

- “Cold” commands wait around 20-30 seconds for the spot EC2 instance to become ready, and then run with degraded performance while the EBS volume warms up.

- “Warm” commands take an additional ~100ms, which is very reasonable.

Against my better judgement, here is the full code. It’s a series of unprincipled hacks (and using StrictHostKeyChecking=no is dangerous), but it works well for my workflow.

Python Code

import base64
import json
import os
import subprocess
import sys
from functools import wraps
from shlex import quote
from time import time, sleep

import boto3
from botocore.exceptions import ClientError

# Set in the __main__ block once the key path is known.
base_ssh_command = None
ec2 = boto3.client("ec2", region_name="us-west-2")
# Path of the repository checkout on the remote machine.
fp = "/b/v8/v8"
# EBS volume holding repositories and build artifacts.
volume_id = "vol-xxxxxxxxxxxxxxxxx"
verbose = False

class Color:
    GREEN = "\033[1;32;48m"
    RED = "\033[1;31;48m"
    BLUE = "\033[1;34;48m"
    END = "\033[1;37;0m"

def verbose_timing(f):
    """Print how long the wrapped function took, and whether it failed."""
    @wraps(f)
    def wrap(*args, **kw):
        ts = time()
        print(f"{Color.BLUE}running {f.__name__}{Color.END}")
        error = None
        try:
            result = f(*args, **kw)
        except Exception as e:
            error = e
        te = time()
        diff = te - ts
        if diff > 2:
            diff_nice = f"{diff:2.4f} sec"
        else:
            diff_nice = f"{int(diff*1000)} ms"
        if error:
            print(f"{Color.RED}... failed in {diff_nice}{Color.END}")
            raise error
        else:
            print(f"{Color.GREEN}... done in {diff_nice}{Color.END}")
            return result
    return wrap

# With verbose off, @timing is a no-op decorator.
timing = verbose_timing if verbose else lambda f: f

@timing
def get_instance_information(instance_name):
    instances = ec2.describe_instances(
        Filters=[
            {"Name": "instance-state-name", "Values": ["pending", "running"]},
            {"Name": "tag:Name", "Values": [instance_name]},
        ]
    )
    # Return the first matching instance; implicitly None if there is none.
    for reservation in instances["Reservations"]:
        for instance in reservation["Instances"]:
            return {
                "public_ip": instance["NetworkInterfaces"][0]["Association"][
                    "PublicIp"
                ],
                "instance_id": instance["InstanceId"],
                "has_volume": any(
                    (
                        bdm["Ebs"]["VolumeId"] == volume_id
                        for bdm in instance["BlockDeviceMappings"]
                    )
                ),
                "state": instance["State"]["Name"],
            }

@timing
def request_spot_instance(instance_name, instance_type):
    request = {
        "MaxCount": 1,
        "MinCount": 1,
        "ImageId": "ami-xxxxxxxxxxxxxxxxx",
        "InstanceType": instance_type,
        "InstanceInitiatedShutdownBehavior": "terminate",
        "KeyName": instance_name,
        "EbsOptimized": True,
        # Safety net: shut down 5 minutes after boot unless rescheduled.
        "UserData": base64.b64encode(
            b'#!/bin/bash\necho "shutdown -h now" | at now + 5 min\n'
        ).decode("utf8"),
        "NetworkInterfaces": [
            {
                "SubnetId": "subnet-xxxxxxxxxxxxxxxxx",
                "AssociatePublicIpAddress": True,
                "DeviceIndex": 0,
                "Groups": ["sg-xxxxxxxxxxxxxxxxx"],
            }
        ],
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                # Must match the tag:Name filter in get_instance_information.
                "Tags": [{"Key": "Name", "Value": instance_name}],
            }
        ],
        "InstanceMarketOptions": {"MarketType": "spot"},
        "MetadataOptions": {
            "HttpTokens": "required",
            "HttpEndpoint": "enabled",
            "HttpPutResponseHopLimit": 2,
        },
        "PrivateDnsNameOptions": {
            "HostnameType": "ip-name",
            "EnableResourceNameDnsARecord": False,
            "EnableResourceNameDnsAAAARecord": False,
        },
    }
    response = ec2.run_instances(**request)
    return response["Instances"][0]["SpotInstanceRequestId"]

@timing
def wait_for_spot_instance_request(spot_request_id):
    # Poll once a second until the request is fulfilled or fails.
    while True:
        response = ec2.describe_spot_instance_requests(
            SpotInstanceRequestIds=[spot_request_id]
        )
        state = response["SpotInstanceRequests"][0]["State"]
        if state == "active":
            instance_id = response["SpotInstanceRequests"][0]["InstanceId"]
            return instance_id
        elif state == "failed":
            raise Exception("Spot instance request failed.")
        sleep(1)

@timing
def attach_volume(instance_id):
    last_error = None
    for retries in range(60):
        try:
            response = ec2.attach_volume(
                Device="/dev/sdf", InstanceId=instance_id, VolumeId=volume_id
            )
            break
        except ClientError as e:
            str_e = str(e)
            # Retry while the volume is still attached elsewhere or the
            # instance has not reached the running state yet.
            if "(VolumeInUse)" in str_e or "is not 'running'." in str_e:
                last_error = e
            else:
                raise e
        sleep(1)
    else:
        raise last_error
    return response

@timing
def mount_when_ready(public_ip, key_file_path):
    # The attached volume can show up under different device names.
    ssh_command = f"{base_ssh_command} ubuntu@{public_ip} 'mountpoint /b >/dev/null || {{ sudo mount /dev/nvme1n1 /b || sudo mount /dev/sdf /b || sudo mount /dev/xvdf /b; }}'"
    while subprocess.call(ssh_command, shell=True) != 0:
        sleep(0.1)

@timing
def sync_changes_to_git():
    subprocess.check_call(
        'git add -u && git commit --allow-empty -m"for build"', shell=True
    )
    return subprocess.check_output(["git", "rev-parse", "HEAD"]).strip().decode("utf-8")

@timing
def push_git_sha(public_ip, key_file_path, git_sha):
    ssh_command = (
        f"git push -f ssh://ubuntu@{public_ip}{fp} HEAD:refs/heads/for-spot-build"
    )
    subprocess.check_call(ssh_command, shell=True)

@timing
def setup_build(public_ip, key_file_path, git_sha):
    ssh_command = (
        f"{base_ssh_command} ubuntu@{public_ip} 'cd {fp} && git checkout -q {git_sha}'"
    )
    subprocess.check_call(ssh_command, shell=True)

def run_command(public_ip, key_file_path, user_command):
    ssh_command = f"{base_ssh_command} ubuntu@{public_ip} {quote(user_command)}"
    try:
        subprocess.call(ssh_command, shell=True)
    except KeyboardInterrupt:
        # Ctrl-C ends the remote command; let the caller finish up.
        return

@timing
def rsync_from_remote(public_ip, key_file_path, remote_path, local_path):
    rsync_command = f"rsync --info=progress2 -a -r -e '{base_ssh_command}' ubuntu@{public_ip}:{remote_path} {local_path}"
    subprocess.check_call(rsync_command, shell=True)

def load_build_bot_from_cache(cache_directory):
    with open(cache_directory + "/build_bot.json", "r") as f:
        return json.load(f)

def store_build_bot_cache(cache_directory, build_bot):
    # exist_ok avoids failing when the cache directory is already there.
    os.makedirs(cache_directory, exist_ok=True)
    with open(cache_directory + "/build_bot.json", "w") as f:
        json.dump(build_bot, f)

def is_build_bot_alive(public_ip):
    # Probing the bot also pushes its scheduled shutdown out to 10 minutes.
    shutdown_command = reschedule_shutdown_command(10)
    ssh_command = f"{base_ssh_command} -o ConnectTimeout=3 ubuntu@{public_ip} {quote(shutdown_command)} >/dev/null 2>/dev/null"
    try:
        subprocess.check_call(ssh_command, shell=True)
        return True
    except subprocess.CalledProcessError:
        return False

@timing
def invalidate_build_bot_cache(cache_directory):
    from shutil import rmtree
    # Guard against deleting the wrong directory.
    if "/build-bot/" not in cache_directory:
        raise Exception("failing because cache directory looks wrong")
    rmtree(cache_directory)

def reschedule_shutdown_command(minutes):
    # Only cancel existing shutdown jobs after we've scheduled a new
    # shutdown, so that there's always a shutdown scheduled even in the
    # face of incoming interrupts.
    return f'sudo bash -c "to_cancel=$(atq | cut -f 1); echo shutdown -h | at now + {minutes} min; echo "$to_cancel" | xargs atrm; exit 0" >/dev/null 2>/dev/null'

if __name__ == "__main__":
    build_bot = None
    key_file_path = "~/.ssh/build-bot.pem"
    base_ssh_command = f"ssh -i {key_file_path} -o StrictHostKeyChecking=no -o ControlMaster=auto -o ControlPath=/tmp/%C -o ControlPersist=10m"
    instance_name = "build-bot"
    instance_type = "c7a.8xlarge"
    cache_directory = os.path.expanduser("~/.cache/build-bot/")

    # Fast path: try the cached bot before asking the AWS API.
    if os.path.isdir(cache_directory):
        build_bot = load_build_bot_from_cache(cache_directory)
        for retries in range(60):
            if is_build_bot_alive(build_bot["public_ip"]):
                break
            sleep(1)
            if get_instance_information(instance_name) is None:
                # The build bot went away (as expected).
                invalidate_build_bot_cache(cache_directory)
                build_bot = None
                break
        else:
            raise Exception("build bot is present but not responsive")

    user_command = " ".join(sys.argv[1:])
    local_out_directory = "~/v8/v8/out/x64.release"
    commands_to_run = [f"cd {fp}", "export PATH=/b/depot_tools:$PATH"]
    os.environ["GIT_SSH_COMMAND"] = base_ssh_command

    if build_bot is None:
        build_bot = get_instance_information(instance_name)
    if build_bot is None:
        spot_request_id = request_spot_instance(instance_name, instance_type)
        instance_id = wait_for_spot_instance_request(spot_request_id)
        for retries in range(60):
            try:
                sleep(1)
                build_bot = get_instance_information(instance_name)
                if build_bot is not None:
                    break
            except KeyError:  # network not ready yet...
                pass
        else:
            raise Exception("spot instance request fulfilled but no bot")

    public_ip = build_bot["public_ip"]
    if not build_bot["has_volume"]:
        instance_id = build_bot["instance_id"]
        attach_volume(instance_id)
        build_bot["has_volume"] = True
    if not build_bot.get("has_mount"):
        mount_when_ready(public_ip, key_file_path)
        build_bot["has_mount"] = True

    if "gm.py" in user_command:
        git_sha = sync_changes_to_git()
        push_git_sha(public_ip, key_file_path, git_sha)
        commands_to_run.append(f"git checkout {git_sha} >/dev/null")
    commands_to_run.append(user_command)
    commands_to_run.append(reschedule_shutdown_command(3))
    run_command(public_ip, key_file_path, ";".join(commands_to_run))
    store_build_bot_cache(cache_directory, build_bot)

    # add as necessary
    # rsync_from_remote(public_ip, key_file_path, f"{fp}/out/x64.release/", local_out_directory)

Packer Code

packer {
  required_plugins {
    amazon = {
      version = ">= 0.0.1"
      source  = "github.com/hashicorp/amazon"
    }
  }
}

source "amazon-ebs" "ubuntu" {
  ami_name      = "build-bot-ami"
  instance_type = "c7a.xlarge"
  region        = "us-west-2"
  source_ami_filter {
    filters = {
      name                = "ubuntu/images/*ubuntu-focal-20.04-amd64-server-*"
      root-device-type    = "ebs"
      virtualization-type = "hvm"
    }
    most_recent = true
    owners      = ["099720109477"]
  }
  ssh_username = "ubuntu"
}

build {
  name = "build-bot"
  sources = [
    "source.amazon-ebs.ubuntu"
  ]

  provisioner "file" {
    source      = "../build-bot.pub"
    destination = "/tmp/build-bot.pub"
  }

  provisioner "shell" {
    script = "setup.sh"
  }
}

setup.sh Shell Code (used by Packer)

#!/bin/bash
set -Eeuox pipefail

# Update & upgrade all packages.
export DEBIAN_FRONTEND=noninteractive
sudo apt-get update -y
sudo apt-get -y -o Dpkg::Options::="--force-confdef" \
  -o Dpkg::Options::="--force-confold" dist-upgrade
sudo apt-get update

# Install the SSH key.
mkdir -p ~/.ssh
chmod 700 ~/.ssh
cp /tmp/build-bot.pub ~/.ssh/authorized_keys
chmod 600 ~/.ssh/authorized_keys

# Some dependencies I use.
sudo apt-get -y -qq install ninja-build ccache

# Dependencies for V8/Chromium.
curl "https://chromium.googlesource.com/chromium/src/+/main/build/install-build-deps.py?format=TEXT" \
  | base64 -d > /tmp/install_build_deps.py
sudo python3 /tmp/install_build_deps.py

# By default you can't log in while the machine is booting or
# shutting down. Comment that out - lets us SSH in faster.
sudo sed -i '/account[[:space:]]*required[[:space:]]*pam_nologin.so/s/^/#/' /etc/pam.d/sshd

# Add the mountpoint.
sudo mkdir -p /b
sudo chown ubuntu:ubuntu /b

# Add some configuration files outside of the root mount.
mkdir -p ~/.ccache
cat > ~/.ccache/ccache.conf <<EOF
max_size = 32.0G
cache_dir = /b/ccache
EOF
echo . /b/.bashrc >> ~/.bashrc
echo source /b/.vimrc >> ~/.vimrc

P.S. After doing all this, I’m thinking I’ll just buy a new computer.